NVIDIA’s potential competitors

Reference link: https://36kr.com/p/2555598308235400

Generative AI has taken the market by storm, and NVIDIA, an early mover, was in the right place at the right time. Its market value has grown from $500 billion last year to a trillion dollars, putting it on par with giants such as Amazon and Google.

With its cards selling for 300,000 yuan apiece and orders pouring in from other tech giants, NVIDIA’s string of record results confirms how sought-after GPUs are for AI training in data centers.

However, any market with excess profits attracts competitors, all the more so in a fast-moving technology sector. AMD, Intel, and even tech giants preparing to develop their own chips are eager to take a shot. Who will breach NVIDIA’s moat?

Forbes magazine commented, “If there are still potential competitors for NVIDIA in the industry, they must include Lisa Su and her AMD.”

How do you defeat the dominant player in the chip market?

AMD is best placed to answer this question. Relying on leading process nodes and architectures and a steadily improving CPU lineup, the company once pushed Intel’s share of the data center and PC markets down from a near monopoly to 60%.

Can AMD catch up again, this time against a surging NVIDIA?

Will the next trillion-dollar chip giant be AMD?

01

At first, the two companies competed in different segments, both in product development and in market choice. NVIDIA has long been rooted in graphics cards and leads in high-end chips. Before launching a frontal attack on NVIDIA, AMD had already upended Intel, the dominant CPU supplier for PCs and data centers, with its new Zen architecture and TSMC’s 7nm process.

At present, NVIDIA stands out in GPUs, but AMD’s product portfolio is more diverse, with both x86 CPUs and discrete GPUs on the PC side. Compared with traditional CPUs, its processors with integrated graphics offer stronger image rendering, which laid the foundation for AMD’s rapid entry into the AI accelerator race.

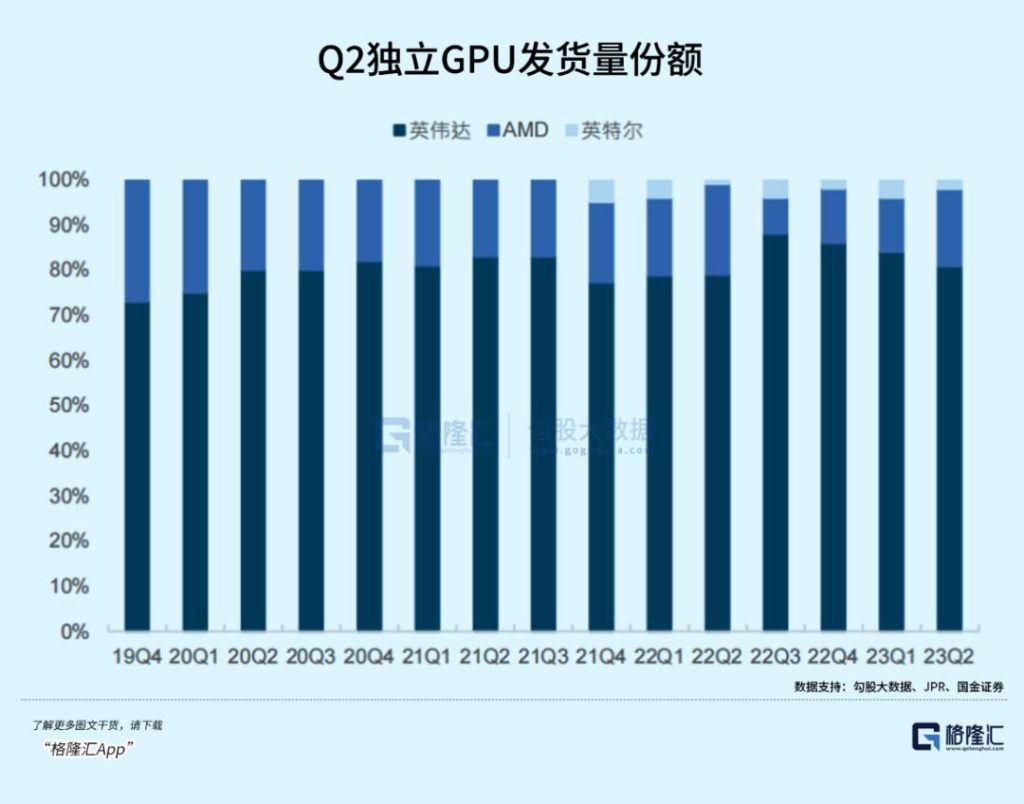

According to Statista, AMD ranked second only to Intel in the PC CPU market in the third quarter of 2023, with a 35% share. In discrete GPUs, NVIDIA leads with more than 70% of the market, while AMD, again in second place, accounted for 17% of global shipments in the second quarter of this year.

Overall, in graphics cards NVIDIA has built a strong barrier out of leading compute performance and its software ecosystem, which gives it a better user experience and a larger advantage in the high-end flagship market.

AMD graphics cards pursue not only graphics rendering performance but also better general-purpose compute performance, and its entry-level cards offer better value for money. In developer environments, however, AMD’s OpenCL-based toolchain is far less comprehensive than CUDA, and software incompatibility weakens the network effects of the ecosystem around AMD chips.

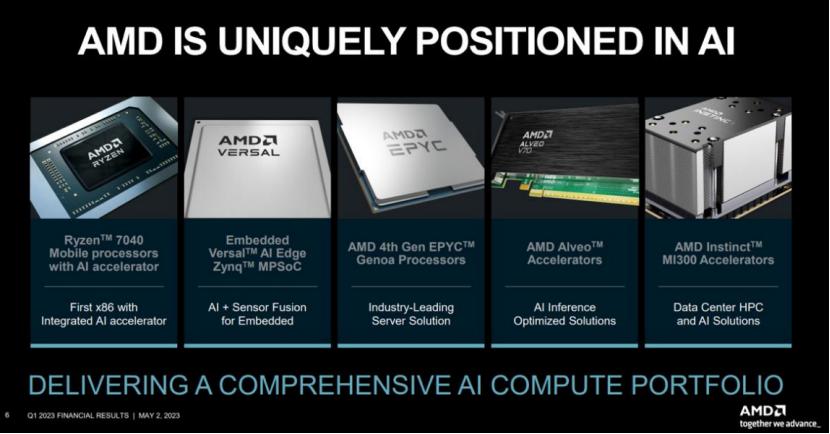

Precisely because AMD built out both types of processor early, it now has the breadth to assemble a more diverse AI product lineup as large AI models spread and may eventually be integrated into all kinds of smart devices. The lineup includes the Ryzen 7040 series CPUs with integrated Ryzen AI, the Versal AI adaptive data center platform, Alveo accelerators, the fourth-generation EPYC Genoa processors, and the announced, upcoming Instinct MI300.

Among them, the MI300 is considered the most promising candidate to challenge NVIDIA’s position and to repeat AMD’s successful breakthrough against Intel in 2016.

AMD unveiled the Instinct MI300 accelerator at CES 2023 as the first data center-class APU. The APU concept, which AMD first introduced in 2011, simply means packaging a CPU and a GPU together. The MI300 is aimed at training and inference for large language models and is positioned against NVIDIA’s Grace Hopper (Grace CPU + Hopper H100 GPU).

As a product that can rival the H100 in AI training, the MI300 comes very close to NVIDIA’s GH series in chip architecture, process, compute performance, and memory bandwidth. However, because its software ecosystem lags behind, it is unlikely to shake NVIDIA’s customer loyalty in training for the time being.

First, the chip architecture: the MI300 is AMD’s first product to combine Zen 4 CPU cores with a CDNA 3 GPU. It uses 3D stacking and a chiplet design, with nine chiplets built on a 5nm process, on par with the 4nm process of NVIDIA’s Grace Hopper (which belongs to TSMC’s 5nm family).

The MI300 has 146 billion transistors, more than the NVIDIA H100’s 80 billion. It carries 24 Zen 4 data center CPU cores and 128 GB of HBM3 memory on an 8192-bit-wide bus.

In terms of compute performance, the previous-generation MI250X reached 47.9 TFLOPS in FP32, surpassing the NVIDIA A100’s 19.5 TFLOPS, although it was released later than the A100. AMD has not published a performance comparison between the MI300 and the H100. We only know that, compared with the MI250X, the MI300 is expected to deliver 8 times the performance and 5 times the energy efficiency (TFLOPS per watt). From this one can infer that its performance should come close to the Grace Hopper level.

On the memory side, high capacity and high bandwidth are the MI300’s strengths: 2.4 times and 1.6 times those of the NVIDIA H100, respectively. Thanks to the much larger memory capacity, a single MI300X chip can run an 80-billion-parameter model.
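A rough back-of-the-envelope check illustrates why capacity matters for the 80-billion-parameter claim. The sketch below assumes the H100 baseline is its 80 GB variant and that weights are stored in 16-bit precision (2 bytes per parameter); neither assumption is stated in the article, and the function name is purely illustrative. It counts weights only and ignores activations and caches, so it gives a lower bound. The implied 192 GB also differs from the 128 GB quoted earlier, which suggests the two figures refer to different MI300 variants.

```python
# Back-of-the-envelope sketch: do the weights of an 80-billion-parameter
# model fit in one accelerator's memory?
# Assumptions (not from the article): H100 baseline = 80 GB,
# weights stored as 16-bit values (2 bytes per parameter).

H100_MEMORY_GB = 80          # assumed baseline capacity
CAPACITY_RATIO = 2.4         # capacity ratio versus the H100, as quoted in the text
BYTES_PER_PARAM_FP16 = 2     # assumed 16-bit weights


def weight_footprint_gb(num_params: float, bytes_per_param: int) -> float:
    """Memory needed for the weights alone, in GB (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


mi300x_memory_gb = H100_MEMORY_GB * CAPACITY_RATIO
weights_gb = weight_footprint_gb(80e9, BYTES_PER_PARAM_FP16)

print(f"Implied MI300X capacity: {mi300x_memory_gb:.0f} GB")
print(f"16-bit weights of an 80B model: {weights_gb:.0f} GB")
print("Fits on one MI300X:", weights_gb <= mi300x_memory_gb)
print("Fits on one 80 GB H100:", weights_gb <= H100_MEMORY_GB)
```

Under these assumptions the weights alone exceed a single 80 GB card but fit within the larger capacity, which is consistent with the single-chip claim.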

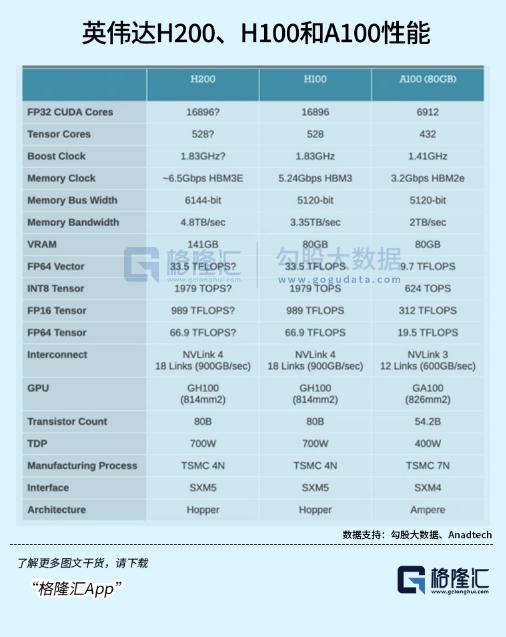

Recently, NVIDIA released the H200 in response to the MI300, focusing on upgraded memory bandwidth. The H200 has up to 141GB of memory, with bandwidth raised from 3.35TB/s to 4.8TB/s. Its memory capacity exceeds the MI300’s 128GB, while its bandwidth is slightly below that of the MI300, which is 1.6 times that of the H100.
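The bandwidth comparison can be checked with simple arithmetic. The sketch below uses only the figures quoted in the text (3.35TB/s, 4.8TB/s, and the 1.6 times ratio); the variable names are illustrative, and the MI300 value is derived from the quoted ratio rather than taken from an AMD specification.

```python
# Sketch: compare memory bandwidth using only the figures quoted in the text.

H100_BANDWIDTH_TBPS = 3.35      # H100 bandwidth quoted in the text
H200_BANDWIDTH_TBPS = 4.8       # H200 bandwidth quoted in the text
MI300_RATIO_VS_H100 = 1.6       # MI300 bandwidth relative to H100, per the text

# Relative uplift of the H200 over the H100.
h200_ratio = H200_BANDWIDTH_TBPS / H100_BANDWIDTH_TBPS

# MI300 bandwidth implied by the quoted ratio (a derived value, not a spec).
mi300_implied_tbps = H100_BANDWIDTH_TBPS * MI300_RATIO_VS_H100

print(f"H200 vs H100: {h200_ratio:.2f}x")
print(f"Implied MI300 bandwidth: {mi300_implied_tbps:.2f} TB/s")
print("H200 below MI300:", H200_BANDWIDTH_TBPS < mi300_implied_tbps)
```

On these numbers the H200 reaches roughly 1.43 times the H100’s bandwidth, which is why it still trails the 1.6 times figure quoted for the MI300.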

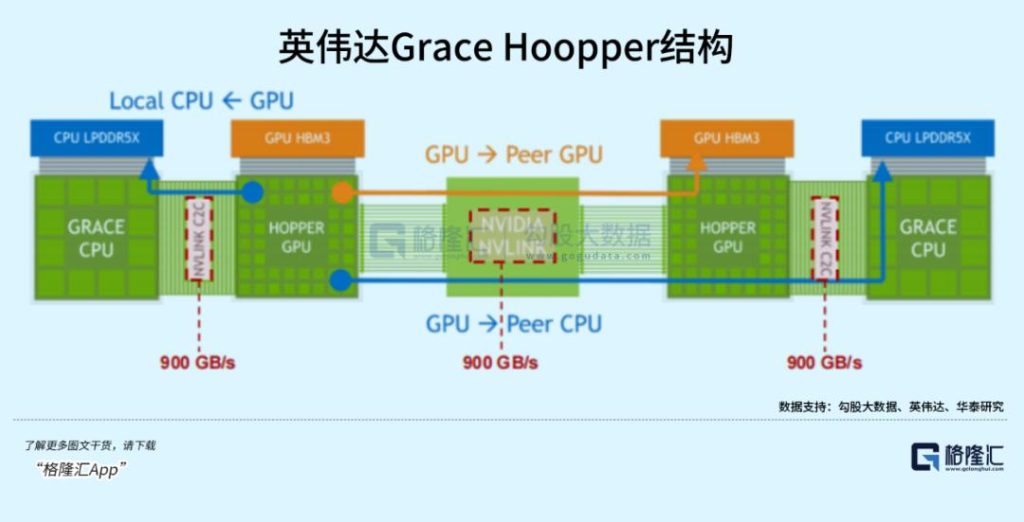

As model parameter counts grow, AI training and inference involve massive computation and data movement, which places higher demands on the data transfer speed between GPU and CPU.

Grace Hopper uses NVLink-C2C and NVLink Switch for CPU-GPU and GPU-GPU interconnect. As peers sharing memory, the two sides can directly access each other’s memory space, with 900GB/s of bandwidth and up to 150TB of memory accessible at high bandwidth. This effectively addresses the pain point of “insufficient local memory on a single node” in large-scale GPU parallel computing, and makes NVIDIA’s advantage here more pronounced.

AMD has not yet announced the interconnect bandwidth of the MI300, but its 3D chiplet architecture lets the on-package CPU and GPU share the same memory space, so the CPU does not need to copy data before computing, which reduces memory bandwidth usage. The new-generation HBM3 memory used in the MI300 offers approximately 819GB/s of bandwidth per stack, which is similar to the 900GB/s of NVIDIA’s NVLink-C2C.

The MI300 may already be very close to NVIDIA’s leading products in specifications and performance, but NVIDIA’s real trump card is the CUDA ecosystem, which pairs with its chips and has built up a first-mover advantage over years of cultivation. The combination of Microsoft’s operating system and Office productivity tools is a useful analogy: a steadily improving software ecosystem increases user lock-in and creates a virtuous cycle, and each further iteration raises switching costs. The number of CUDA developers worldwide reached 2 million in 2020 and had doubled by 2023.

AMD’s ecosystem is ROCm, whose main customers are research institutions. By comparison, CUDA already supports NVIDIA’s many product lines broadly. ROCm currently supports only Instinct-series GPUs and a few other SKUs, including the Radeon Pro W6800 and Radeon Pro V620.

CUDA has supported Linux and Windows since version 1.0. ROCm only announced Windows support in April this year, and only for the Radeon Pro W6800, Radeon RX 6900 XT, and Radeon RX 6600. In Q1 of this year, the company announced the integration of the PyTorch 2.0 framework into ROCm, and the TensorFlow and Caffe deep learning frameworks have also been added in the fifth generation of ROCm. ROCm now offers counterparts to part of CUDA’s functionality. However, because of its late start, ROCm is used mostly for HPC (high-performance computing), and its coverage of use cases is not as comprehensive as CUDA’s.
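One concrete illustration of ROCm mirroring CUDA is how PyTorch exposes it: ROCm builds of PyTorch reuse the `torch.cuda` namespace, so code written for NVIDIA GPUs can often run unchanged. The sketch below is a minimal check, assuming PyTorch may or may not be installed; the helper name `describe_torch_backend` is hypothetical and not part of any library.

```python
# Sketch: report whether the installed PyTorch build targets CUDA or ROCm.
# The function name is hypothetical; the attributes it reads
# (torch.version.hip, torch.version.cuda, torch.cuda.is_available)
# are part of PyTorch's public API.


def describe_torch_backend() -> str:
    """Return a short description of the GPU stack PyTorch was built for."""
    try:
        import torch
    except ImportError:
        return "PyTorch is not installed"

    # torch.version.hip is a version string on ROCm builds, None otherwise.
    hip_version = getattr(torch.version, "hip", None)
    cuda_version = getattr(torch.version, "cuda", None)

    if hip_version is not None:
        stack = f"ROCm/HIP {hip_version}"
    elif cuda_version is not None:
        stack = f"CUDA {cuda_version}"
    else:
        stack = "CPU-only build"

    # ROCm builds reuse the torch.cuda namespace, so the same availability
    # check applies to AMD GPUs as well as NVIDIA ones.
    gpu_ready = torch.cuda.is_available()
    return f"{stack}; GPU available: {gpu_ready}"


print(describe_torch_backend())
```

This compatibility layer lowers switching costs at the framework level, although it does not by itself close the gap in libraries and tooling described above.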

AMD CEO Lisa Su said that the company plans to expand production of the MI300 series, that samples have been delivered to customers for testing, and that volume sales are expected in 2024. As the ROCm toolchain gradually matures, the gaps in the ecosystem may be filled quickly with support from other vendors. Pricing is expected to continue AMD’s tradition of high price-performance, which should make it much easier to open up the market.

02

NVIDIA and AMD have both now released their latest financial reports and guidance.

Overall, both reports contained plenty of surprises.

NVIDIA’s revenue for the quarter was $18.12 billion (up 206% year-on-year and 32% quarter-on-quarter), well above the market consensus of $16.1 billion and far beyond NVIDIA’s own guidance range of $15.68 billion to $16.32 billion. The main driver was explosive growth in the data center business: data center revenue was $14.514 billion, up 279% year-on-year and 38% quarter-on-quarter, accounting for 80% of total revenue and far exceeding the market expectation of $12.82 billion.

Because explosive demand kept pushing prices up, NVIDIA’s gross margin for the quarter rose sharply, by 20.4 percentage points year-on-year and 3.9 percentage points quarter-on-quarter, to 74%.

NVIDIA’s GAAP net profit for the quarter was $9.24 billion, up 1,259% year-on-year and 49% quarter-on-quarter. Non-GAAP net profit was $10.02 billion, up 588.2% year-on-year and 48.7% quarter-on-quarter, well above the market consensus of $8.4 billion for non-GAAP net profit.

By contrast, AMD posted third-quarter revenue of $5.8 billion, up 4.22% year-on-year and 8.23% quarter-on-quarter, slightly above its earlier guidance of $5.7 billion. Net profit for the third quarter was $299 million, up 353% year-on-year and a tenfold increase over the previous quarter, though mainly because of the low base in that quarter. It was the first year-on-year increase in quarterly net profit after seven consecutive quarters of year-on-year decline, which excited investors.

In the third quarter, AMD’s gross margin was 47%, up 5 percentage points year-on-year and 1 percentage point quarter-on-quarter. Although AMD also benefited from the surge in demand for AI chips, its margin was far below that of NVIDIA, the chief beneficiary of the boom. This wide margin gap reflects a gap in product competitiveness and pricing power. AMD’s graphics technology has made great strides in recent years, but it still has a long way to go to catch up with NVIDIA.

In fact, NVIDIA’s net profit for the quarter was more than 30 times AMD’s; the two are no longer in the same league.

In the third quarter, AMD’s data center revenue was $1.598 billion, down 1% year-on-year and accounting for 28% of revenue. The segment’s operating profit was $306 million, down 40% year-on-year, the third consecutive quarter of decline.
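The ratios quoted in this section can be reproduced from the reported figures. The sketch below uses only numbers from the text, in billions of US dollars; the dictionary layout and variable names are illustrative, and the NVIDIA profit figure is the GAAP one.

```python
# Sketch: reproduce the headline ratios from the quarterly figures in the text.
# All amounts are in billions of US dollars.

nvidia = {"revenue": 18.12, "data_center": 14.514, "net_profit": 9.24}  # GAAP profit
amd = {"revenue": 5.8, "data_center": 1.598, "net_profit": 0.299}

# How many times larger NVIDIA's quarterly net profit is than AMD's.
profit_ratio = nvidia["net_profit"] / amd["net_profit"]

# Data center revenue as a percentage of total revenue.
nvidia_dc_share = 100 * nvidia["data_center"] / nvidia["revenue"]
amd_dc_share = 100 * amd["data_center"] / amd["revenue"]

print(f"Net profit ratio (NVIDIA / AMD): {profit_ratio:.1f}x")
print(f"NVIDIA data center share: {nvidia_dc_share:.1f}%")
print(f"AMD data center share: {amd_dc_share:.1f}%")
```

The results line up with the "more than 30 times", "80%", and "28%" figures given above.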

What the market cares about most, however, is the outlook.

In its guidance, NVIDIA gave a less optimistic outlook: expected revenue of approximately $20 billion (± 2%) in FY24 Q4, with GAAP and non-GAAP gross margins of 74.5% and 75.5%, respectively. The main reason is that the gaming business is expected to decline somewhat in the fourth quarter, and sales to China and other regions affected by the US government’s new export restrictions in October are expected to fall significantly.

AMD’s guidance surprised investors even more, with expected fourth-quarter revenue of $6.1 billion ± $0.3 billion and a gross margin of 51.5% (up 4.5 percentage points quarter-on-quarter). CEO Lisa Su said on the earnings call that several large cloud computing companies have committed to deploying the MI300, which will become the fastest product in AMD’s history to reach $1 billion in revenue and is expected to reach $2 billion in sales next year.
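To make the two guidance statements comparable, they can be converted into explicit ranges and into implied growth over the quarter just reported. The sketch below reads NVIDIA’s guidance as $20 billion plus or minus 2% and assumes AMD’s as $6.1 billion plus or minus $0.3 billion; the helper names are illustrative.

```python
# Sketch: turn the revenue guidance into ranges and implied sequential growth.
# Amounts are in billions of US dollars. The AMD tolerance of 0.3 is an
# assumed reading of the guidance quoted in the text.


def range_from_percent(midpoint: float, pct: float) -> tuple[float, float]:
    """Low and high bounds for a midpoint with a +/- percentage tolerance."""
    delta = midpoint * pct / 100
    return midpoint - delta, midpoint + delta


def range_from_absolute(midpoint: float, delta: float) -> tuple[float, float]:
    """Low and high bounds for a midpoint with a +/- absolute tolerance."""
    return midpoint - delta, midpoint + delta


def implied_growth_pct(guided: float, previous: float) -> float:
    """Quarter-on-quarter growth implied by the guidance midpoint."""
    return 100 * (guided - previous) / previous


nvidia_low, nvidia_high = range_from_percent(20.0, 2.0)
amd_low, amd_high = range_from_absolute(6.1, 0.3)

nvidia_growth = implied_growth_pct(20.0, 18.12)   # versus $18.12B this quarter
amd_growth = implied_growth_pct(6.1, 5.8)         # versus $5.8B this quarter

print(f"NVIDIA: {nvidia_low:.1f} to {nvidia_high:.1f}, growth {nvidia_growth:.1f}%")
print(f"AMD: {amd_low:.1f} to {amd_high:.1f}, growth {amd_growth:.1f}%")
```

Even at the midpoint, NVIDIA’s guidance implies faster sequential growth than AMD’s, so the investor reaction reflects expectations rather than absolute scale.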

On the strength of this guidance, AMD’s stock closed up 9.7% on the Wednesday after the results were released, and continued to rise in the following days.

Interestingly, the regional revenue breakdowns show that the two companies’ customer bases overlap considerably.

In 2022, 30.74% of NVIDIA’s revenue came from the United States, followed by 25.9% from Taiwan, China, and 21.91% from mainland China (including Hong Kong). In total, 47.81% of NVIDIA’s revenue came from China, nearly half of the total.

However, according to the latest data, explosive demand for AI graphics cards and US government restrictions on exports to China caused third-quarter revenue from the United States to surge. At the same time, according to the guidance, NVIDIA’s exports of high-end graphics cards to China will fall significantly in the fourth quarter, and its regional revenue structure will change substantially.

NVIDIA seems to have quickly found a workaround, however. According to the CFO, NVIDIA is developing a new series of compute chips for China: the HGX H20, L20 PCIe, and L2 PCIe, all of which are derived from the H100 and comply with the relevant US regulations.

As for AMD, revenue from the United States accounts for 34.1%, while revenue from China (including Hong Kong and Taiwan) accounts for 32.1% in total. Although that share is not as high as NVIDIA’s, AMD’s sales growth in China has been significant in recent years.

In other words, the restrictions on NVIDIA’s business in China may leave an opening for AMD. Although AMD is also subject to the US restrictions, this still gives it a potential opportunity to compete on a level playing field in the future.

At present, to close the gap with NVIDIA quickly, AMD is acquiring AI and data-related assets at scale, trying to fill in the missing pieces of its AI puzzle and pave the way for its future data center business.

On April 4, 2022, AMD announced a $1.9 billion acquisition of DPU chip maker Pensando to expand its data center solutions.

On August 24 this year, AMD acquired AI startup Mipsology to strengthen its AI inference software capabilities.

On October 11, AMD announced the acquisition of open-source AI company Nod.ai to increase its competitiveness in the AI market.

Meanwhile, AMD’s R&D expense ratio has consistently exceeded 20% in recent years and continues to rise. In the third quarter, AMD’s R&D spending was $1.507 billion, up 17.83% year-on-year, and its R&D expense ratio reached 25.9%, a year-on-year increase of 32.9%. For comparison, NVIDIA’s latest financial report shows R&D spending of $2.04 billion, up 11.84% year-on-year, and an R&D expense ratio of 23.9%, a year-on-year increase of 12.58%.

03 Epilogue

The vision of AI-powered smart devices is becoming reality, and large AI models point to the next potential market for chip makers such as NVIDIA, AMD, and Intel. Whether in data centers or in devices such as PCs and smartphones, demand for chip upgrades and the shift toward higher-value products will push these companies to speed up their R&D and product launches.

Although AMD still lags behind NVIDIA in many respects, it has demonstrated growth potential that cannot be ignored.

In AI accelerators, the fierce competition between NVIDIA and AMD looks set to continue.